AR Video Recorder for VR

iOS app that records Oculus MRC content in AR.

- AR/VR

- Swift

- UI/UX

I made an iOS app to help me record video material to advertise my MoveMusic VR app. The app looks like a regular iOS camera app, but it can connect to Meta (Oculus) Quest VR headsets to display and record AR-style video of the VR headset user in their VR space relative to the iOS device camera. The recordings make it much easier to demonstrate MoveMusic because you can see both the person using VR and the virtual objects around them. The app shows a real-time composited AR visual in the camera viewfinder and can record 1920x1440 footage at 60 FPS to two separate video files, one for the iOS camera and one for the VR camera, so the two can be composited at higher quality in post-production. The app uses Meta's Oculus MRC system built into their VR headsets and is based on work by Fabio Dela Antonio from his Reality Mixer app. My recording app is not yet publicly available.

Recording of a MoveMusic performance captured with my AR recording app.

Background

At the time of making MoveMusic (2020-2022), there were few developer tools for standalone VR that let you record a VR experience in augmented/mixed reality using a moving camera. Meta (Oculus) offers a solution called Mixed Reality Capture (Oculus MRC), but it was intended to be used with a stationary DSLR camera connected to a PC. I was disappointed that there was no official tool from Oculus for recording with a moving camera. But I came across an awesome open-source tool, Reality Mixer, created by an independent developer, Fabio Dela Antonio, which included a moving camera feature.

Development

When I tried Reality Mixer's moving camera feature, the VR imagery lagged significantly behind the real-world video whenever I moved the iOS device. The VR visuals and real-world visuals were not synchronized, so the footage was not usable for me. But I was impressed by Reality Mixer's ability to use Apple's ARKit technology to track the 3D position of the iOS device relative to the VR world coordinates. I was convinced it would be possible to use ARKit and iOS to solve my problem.

Prototype 1

My initial idea to solve this problem was to use a DSLR camera as my main recording device and mount an iPhone on top of the camera to provide 3D position tracking of that camera. I was motivated to use a DSLR for high quality recording. Implementing the idea required both creating an iOS app to handle the 3D tracking and modifying Meta's open-source Oculus MRC OBS Plugin (Windows only 😭) which handles the communication with the VR headset and recording of video. The iPhone would track camera movement in 3D space, send the movement updates to the MRC OBS plugin on PC, which would then send the movement updates to the VR headset to render the current camera view. Indirect communication between the iPhone and VR headset was necessary because the Oculus MRC system only supports a single connection to send/receive MRC data and the OBS plugin was already handling that role 😭.

Regardless, I moved forward with creating the prototype. To create the iOS app, I referenced Apple's ARKit example code and some of Reality Mixer's code. This was my first experience writing a Swift app and using ARKit. The iOS app was actually a joy to code: I began loving Swift, and Apple's APIs are very high quality. To make my modifications to the OBS plugin, I wrote networking code using Windows C++ networking APIs.
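The movement updates sent from the iPhone to the PC can be pictured as small fixed-size packets. The sketch below is only an illustration, assuming a hypothetical wire format of seven little-endian 32-bit floats (a position followed by a rotation quaternion). The `CameraPose` type, its field names, and the byte layout are made up for this example and are not the format the prototype or the Oculus MRC OBS plugin actually used.

```swift
// Hypothetical camera pose: position plus orientation quaternion.
struct CameraPose: Equatable {
    var px: Float, py: Float, pz: Float             // position
    var qx: Float, qy: Float, qz: Float, qw: Float  // rotation quaternion

    // Seven 32-bit floats per packet (assumed layout).
    static let byteCount = 7 * 4

    // Serialize every field as a little-endian 32-bit float.
    func encoded() -> [UInt8] {
        var bytes: [UInt8] = []
        bytes.reserveCapacity(CameraPose.byteCount)
        for value in [px, py, pz, qx, qy, qz, qw] {
            let bits = value.bitPattern
            for shift in stride(from: 0, to: 32, by: 8) {
                bytes.append(UInt8(truncatingIfNeeded: bits >> shift))
            }
        }
        return bytes
    }
}

extension CameraPose {
    // Rebuild a pose from a packet; returns nil if the length is wrong.
    init?(decoding bytes: [UInt8]) {
        guard bytes.count == CameraPose.byteCount else { return nil }
        var values: [Float] = []
        for index in 0..<7 {
            var bits: UInt32 = 0
            for byte in 0..<4 {
                bits |= UInt32(bytes[index * 4 + byte]) << (8 * byte)
            }
            values.append(Float(bitPattern: bits))
        }
        self.init(px: values[0], py: values[1], pz: values[2],
                  qx: values[3], qy: values[4], qz: values[5], qw: values[6])
    }
}

// Usage: encode on the sender, decode on the receiver.
let sentPose = CameraPose(px: 1.5, py: -2, pz: 0.25, qx: 0, qy: 0, qz: 0, qw: 1)
let packet = sentPose.encoded()
let receivedPose = CameraPose(decoding: packet)
```

A fixed-size binary packet like this keeps each update small, which matters when every extra network hop adds latency.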

A recording produced using the first prototype recording system which used an iOS device mounted to a DSLR to provide camera tracking (3:06 - 3:59)

The results were not great, but slightly better than my initial experience with Reality Mixer. There was less visual latency, but it was still significant, and I wanted to eliminate it completely in the final recording. There were quite a few problems with this prototype:

- Usability was horrible.

- Setup required calibration using the Oculus MRC Capture tool on PC which sends camera calibration data to the VR headset, but this data was also needed on the iOS app to perform 3D camera tracking, so after calibrating you had to manually type 3D position and rotation values into the app.

- Required the use of a dedicated Windows PC (I bought a cheap used PC for this)

- Required bulky camera equipment

- Extra latency was caused by sending movement data from iPhone to PC to VR headset instead of directly from iPhone to VR headset.

Prototype 2

I was quite discouraged after the initial prototype and did not come back to this project for a few months since I did not have the time to dedicate to it. But after finishing my work on SubLabXL, I had enough time to continue!

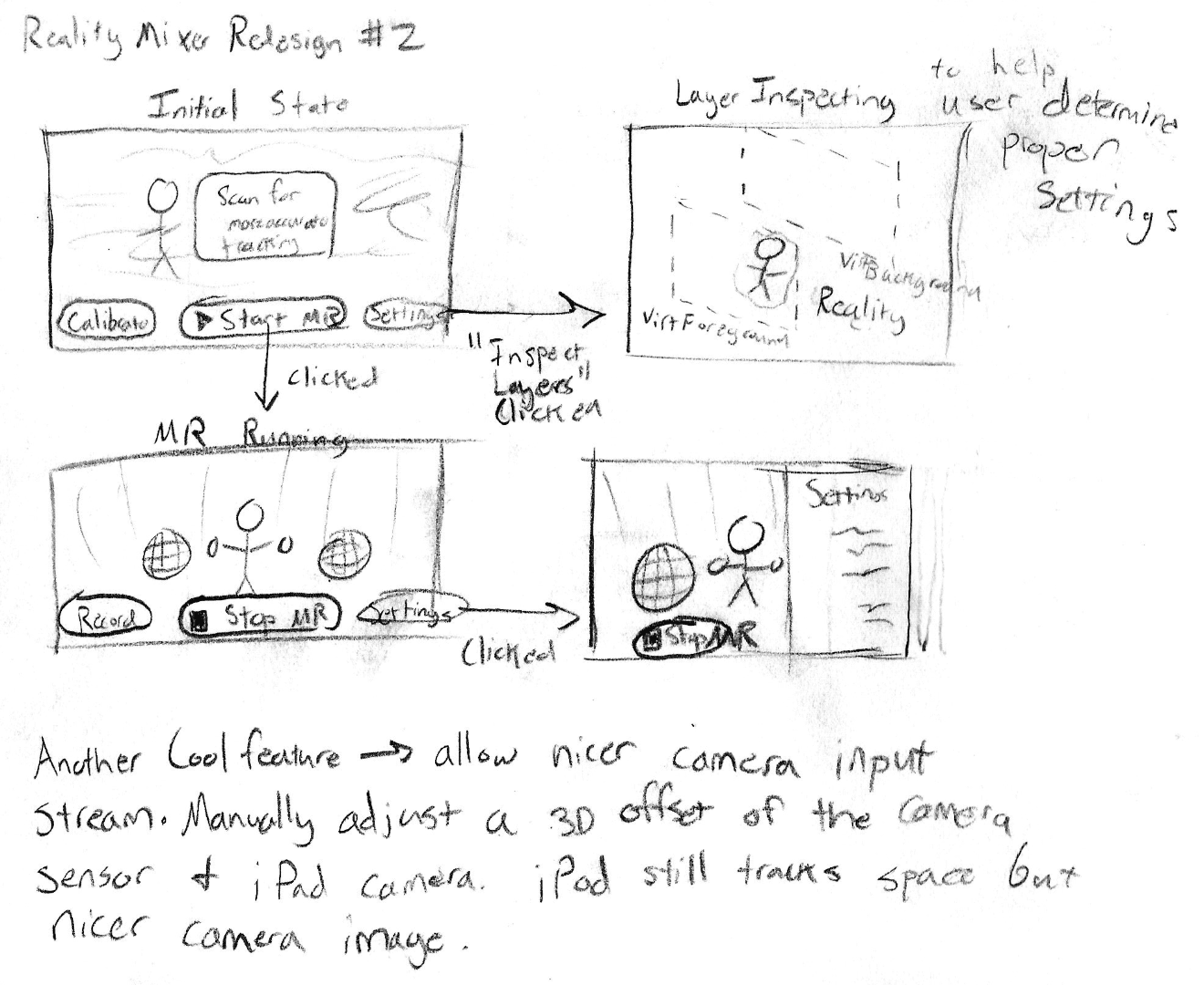

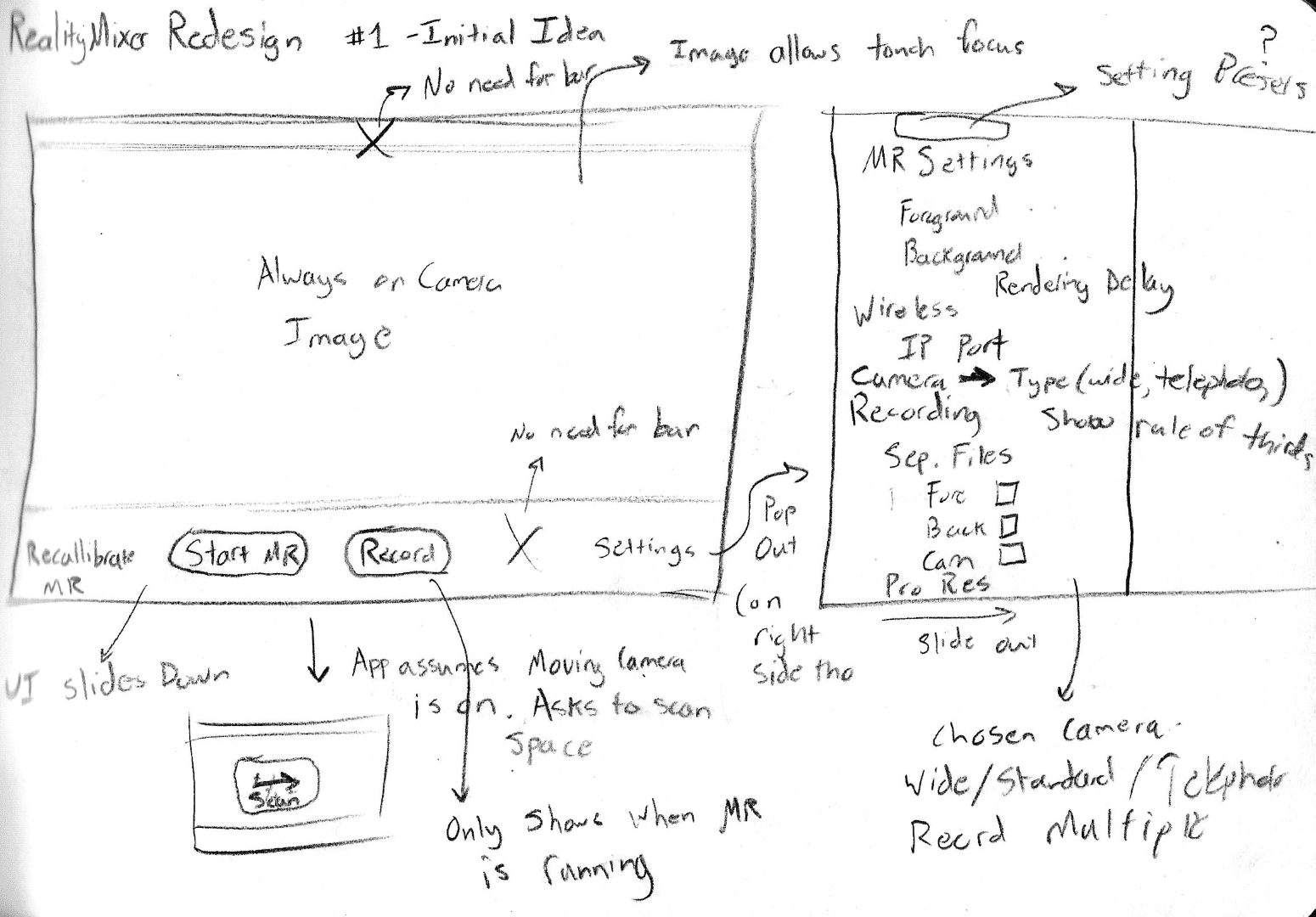

Two sketched ideas for the second prototype of the AR recording app.

After considering multiple ideas I decided it would be best to create an app that was closer in likeness to Reality Mixer, requiring only the VR headset and an iOS device. I began work on this prototype, starting with some Apple-provided template code and referencing Reality Mixer. My implementation solved these significant issues:

- Recording resolution: Reality Mixer does not support video recording and suggests using iOS' screen recording feature, which limits video resolution to 1920x888. Publishing a VR app to the Meta store requires a minimum video resolution of 1920x1080, so I implemented a recording feature using Apple's AVFoundation APIs. It was straightforward to record the video feed from the iOS device's camera, but more difficult to record the H.264 video stream of the VR imagery since the Oculus MRC API does not provide any video frame time synchronization information. My recording implementation defaults to 1920x1440 at 60 FPS but can be configured for a higher resolution at the cost of a lower frame rate. I've also been experimenting with an HDR recording option for the iOS camera.

- Recording separate video feeds: Recording separate video feeds of the real camera video and the VR imagery allowed us to use video editing software to composite the final video after recording so we could sync the timelines of the VR image and real-camera image layers to eliminate visual latency.

- Ensuring best possible ARKit tracking: Reality Mixer instantiates a new ARKit view & session for each calibration and VR capture step and requires that the iOS camera stay stationary between both steps. This means that when using Reality Mixer, the user does not have a chance to pan the iOS device around them to allow ARKit to scan the space before calibration and position estimation occur. I surmise this can lead to a less accurate estimation of 3D position by ARKit because it only has a stationary understanding of the world around it. In my app, I use a single ARKit view & session throughout the app's lifetime, and these ARKit objects are instanced before calibration with VR even occurs. My app also uses ARKit's `ARCoachingOverlayView` to guide the user to pan around them to scan the space until ARKit reports it has a good 3D estimate.

- Ensuring best possible network latency: Reality Mixer uses a 3rd party Swift package, SwiftSocket, to handle TCP connections, but Apple has an existing API for these connections called Network Framework. Assuming Network Framework would give me the best possible performance on iOS devices as it is most definitely optimized by Apple specifically for these devices, I implemented all networking code using Network Framework.

- Showing real-time AR-style visuals in camera viewfinder: Reality Mixer already demonstrated the ability to show VR visuals in the camera viewfinder, but to achieve an AR effect, I wrote shader code to process the VR visuals and present MoveMusic's VR objects as transparent on top of the iOS camera video. Since the VR imagery sent from MoveMusic appears as translucent objects on a black background, I used the luminance value of each pixel to determine the transparency value I would render. This also required extra implementation in the MoveMusic VR app, detailed in a section below. With the ARKit tracking and network latency optimizations described in the two previous items, the viewfinder was able to show the VR visuals with low visual latency when moving the iOS device. I experienced even less latency using newer iOS hardware (iPhone 13), a VR resolution scale factor of 0.5x in the app settings, and setting the Quest VR headset to 120 Hz mode.

- UI rework: For a streamlined experience, I made the app feel like the built-in iOS camera app down to the recreation of the record button. I also displayed the full camera view with a rule-of-thirds guide instead of cropping the view to the screen boundaries. I cleaned up the UX flow of entering/exiting calibration and VR capture states by extracting Reality Mixer's repeated form-style pages into a single settings menu. Lastly, I added the ability to record multiple video takes in a single VR capture session, and allowed the VR capture state to be entered/exited without re-calibrating each time, even if the iOS device was moved between sessions.

Screen recording demonstration of my AR recording app and UI. I should've combed my hair 😂

The result was much better than I had anticipated! We were able to very rapidly create high quality AR-style video recordings of my MoveMusic app.

Required VR Implementation

Although the primary work for this project was creating the iOS app, the final result required some implementation in my MoveMusic VR app and modification to the game-engine source code that MoveMusic is built upon, the Oculus Integration for Unreal Engine 4. This Unreal Engine 4 (UE4) integration includes device-specific features for Meta's VR systems including the Oculus MRC capture system upon which my AR recording app relies.

In the Oculus UE4 integration, the Oculus MRC code captures two virtual camera images, a foreground image representing objects in front of the VR user, and a background image representing objects behind the VR user, and sends both over the network to the recorder app. The code uses UE4's SceneCapture2D to capture the VR imagery, but by default, SceneCapture2D does not render objects with translucent materials if there are no objects rendered behind those materials. Since my MoveMusic VR objects are translucent, I am unable to see them rendered in Oculus MRC's foreground VR image, but the objects do appear in Oculus MRC's background image with the inclusion of the black VR world background. To work around this issue, I ignore the MRC foreground VR image and only use the background image. To get rid of the black parts of the MRC background image and make the MoveMusic objects appear transparent as they do in VR, I use pixel luminance to determine transparency so that darker pixels are more transparent and brighter pixels are more opaque. In practice, this required me to write a shader in the AR recording app to display the VR image, and in video post production, a luma-keyer is required to composite the video. Additionally, as an optimization for MoveMusic VR, I completely disabled the capturing of the Oculus MRC foreground image since it is unused.
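The luminance-to-transparency idea can be sketched on the CPU as follows. This is a minimal illustration rather than the actual shader: it assumes linear RGB components in the range 0 to 1, Rec. 709 luminance weights, and a plain "over" blend. The `RGB` type and both function names are hypothetical.

```swift
// Hypothetical pixel type with components in the range 0...1.
struct RGB: Equatable {
    var r: Double
    var g: Double
    var b: Double
}

// Relative luminance using Rec. 709 weights (an assumed choice of weights).
func luminance(_ color: RGB) -> Double {
    0.2126 * color.r + 0.7152 * color.g + 0.0722 * color.b
}

// Luma key: the VR pixel's luminance becomes its opacity, so black
// background pixels vanish and bright VR objects cover the camera image.
func lumaKeyedComposite(vr: RGB, camera: RGB) -> RGB {
    let alpha = min(max(luminance(vr), 0), 1)
    func blend(_ top: Double, _ bottom: Double) -> Double {
        top * alpha + bottom * (1 - alpha)
    }
    return RGB(r: blend(vr.r, camera.r),
               g: blend(vr.g, camera.g),
               b: blend(vr.b, camera.b))
}

// Usage: a black VR pixel leaves the camera pixel untouched,
// while a white VR pixel replaces it.
let cameraPixel = RGB(r: 0.2, g: 0.4, b: 0.6)
let overBlack = lumaKeyedComposite(vr: RGB(r: 0, g: 0, b: 0), camera: cameraPixel)
let overWhite = lumaKeyedComposite(vr: RGB(r: 1, g: 1, b: 1), camera: cameraPixel)
```

A fragment shader would apply the same per-pixel math on the GPU, and a luma keyer in video editing software does the equivalent during post-production.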

Another modification I made to the UE4 Oculus integration code was the addition of a feature to show/hide certain UE4 objects from the Oculus MRC camera feed. Without my modification, the MRC footage would render unneeded objects in the camera feed, including the VR floor, controller models, and temporary pop-up UI windows. I was surprised Oculus (Meta) did not implement this feature for UE4 although they did implement it for their Unity engine integration. This modification surprisingly turned into a very time-consuming endeavor. The code that implements Oculus MRC in UE4 was very messy: variables were badly named, there was little documentation, and the whole system was hard to understand. This was disappointing, but I understand that features like this, which are only helpful to some developers, are a low priority for a big business like Meta. I rewrote a decent amount of the Oculus MRC implementation for UE4, including the addition of delegate callbacks for various MRC-related events. These callbacks remove the need to keep a timer loop checking for changes in MRC variables, which is the default expectation of the API.

Regardless of the many roadblocks, I am so glad I finally have an app to help me record marketing videos for my MoveMusic VR app! This project was probably one of the biggest trials I had to overcome in my venture with MoveMusic. It would not have been possible without the work of others like Fabio Dela Antonio, Apple, Meta, Epic Games, and many others.